Ads Are Coming to ChatGPT: How Brands Should Prepare to Measure What Matters

- Brady Hancock

- Jan 17

- 6 min read

On January 16, 2026, OpenAI officially announced that it will begin testing advertising inside ChatGPT, initially for free users and subscribers of the new ChatGPT Go plan in the United States. The announcement is part of the company’s broader rollout of the ChatGPT Go subscription tier, priced at $8 per month, and ad testing is expected to begin in the coming weeks.

📌 Read the official announcement from OpenAI:

While ads are beginning in a limited test, the wider implications for digital marketing and performance measurement are significant.

New platforms almost always create the same problem: brands rush to spend before they’re ready to measure impact. When reporting lags behind adoption, teams are left guessing whether results are incremental, inflated, or misattributed.

If ChatGPT ads become a meaningful acquisition channel, organizations that prepare their measurement strategy now will have a clear advantage once beta ends.

Why ChatGPT Ads Are Different From Traditional Paid Channels

Unlike search or social platforms, ChatGPT lives inside a conversational environment. That changes how users discover brands, how intent is expressed, and how influence happens.

This creates several measurement challenges:

No traditional SERP or feed context

Fewer obvious “click paths”

Higher likelihood of assisted or delayed conversions

Increased risk of last-click misattribution

If measurement relies solely on default attribution models or platform-reported metrics, early ChatGPT ad performance will almost certainly be misunderstood.

The Common Pitfall of Being an Early Adopter

Most organizations focus on whether they should test new ad inventory. They often forget to ask themselves:

"How will we accurately measure performance of this new channel?"

Without preparation, teams risk:

Over-crediting ChatGPT for conversions it assisted but didn’t cause

Under-valuing it because impact shows up later or elsewhere

Making budget decisions based on incomplete or misleading data

Early adoption without measurement maturity often leads to false conclusions—either pulling spend too early or scaling something that isn’t truly incremental.

What Brands Should Put in Place Before ChatGPT Ads Exit Beta

Before launching campaigns, organizations should focus on measurement readiness. That means going beyond strategy and ensuring that tracking, attribution, and reporting foundations are already in place.

1. Configure Conversion Tracking Around Real Business Outcomes

New channels often inherit whatever conversion tracking already exists—whether it’s correct or not.

Before ChatGPT ads launch broadly, brands should:

Audit which analytics events truly represent meaningful business actions

Eliminate duplicate, inflated, or low-quality “conversion” events

Ensure conversions align with funnel stage, not just surface-level engagement

If conversion tracking isn’t clean before testing begins, results from ChatGPT ads will be unreliable from day one.
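As a rough illustration of that audit, here is a minimal Python sketch that filters an event export down to meaningful conversions and drops duplicate fires. The event names, the `MEANINGFUL_EVENTS` allow-list, and the `transaction_id` field are all hypothetical; your analytics export will use its own schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    name: str            # analytics event name, e.g. "purchase"
    transaction_id: str  # ID that should be unique per real conversion
    user_id: str


# Hypothetical allow-list: events this business treats as real conversions.
MEANINGFUL_EVENTS = {"purchase", "qualified_lead"}


def clean_conversions(events):
    """Keep meaningful events and drop repeats of the same (name, transaction_id)."""
    seen = set()
    cleaned = []
    for event in events:
        if event.name not in MEANINGFUL_EVENTS:
            continue  # surface-level engagement, not a conversion
        key = (event.name, event.transaction_id)
        if key in seen:
            continue  # duplicate fire of the same conversion
        seen.add(key)
        cleaned.append(event)
    return cleaned


raw_events = [
    Event("purchase", "T-100", "u1"),
    Event("purchase", "T-100", "u1"),     # duplicate fire (e.g. page reload)
    Event("scroll_depth", "", "u2"),      # engagement only
    Event("qualified_lead", "L-7", "u3"),
]
cleaned = clean_conversions(raw_events)
print(len(raw_events), "raw events,", len(cleaned), "clean conversions")
```

Running a check like this before a new channel launches gives you a baseline for how inflated your current conversion counts are.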

2. Define Attribution Logic Inside Analytics Tools — Including Organic vs Paid LLM Traffic

As AI-driven discovery grows, one of the biggest upcoming challenges will be distinguishing organic LLM influence from paid LLM ads.

ChatGPT and other LLMs can influence users in multiple ways:

Organic brand mentions or recommendations in AI responses

Paid placements within AI-driven experiences

Assisted influence that doesn’t result in an immediate click

Before ChatGPT ads scale, organizations should proactively define:

How paid ChatGPT traffic will be identified and classified in analytics

How organic LLM-driven traffic will be grouped or labeled

Which attribution models will be used for evaluation (first-touch, data-driven, blended views)

How assisted conversions from both paid and organic LLM sources can be assessed

Without clear definitions, brands risk blending organic AI influence with paid performance—making it impossible to understand true return on ad spend or incremental lift.

Defining this logic before campaigns launch ensures analytics reporting remains consistent, defensible, and actionable as LLM traffic grows.
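One way to make those definitions concrete is a small classification rule. The Python sketch below is only an illustration: the referrer hostnames, the `utm_source` values, and the `paid_llm` medium are assumptions about a tagging convention you would define yourself, not parameters OpenAI has confirmed for ChatGPT ads.

```python
from urllib.parse import urlparse, parse_qs

# Assumed referrer hostnames for ChatGPT; extend for other LLM products.
LLM_HOSTS = {"chatgpt.com", "chat.openai.com"}
# Assumed utm_source values that indicate ChatGPT-originated traffic.
LLM_SOURCES = {"chatgpt", "chatgpt.com"}
# Hypothetical utm_medium values your team applies to its own paid ad URLs.
PAID_MEDIUMS = {"cpc", "paid", "paid_llm"}


def classify_llm_traffic(landing_url, referrer=""):
    """Return 'paid_llm', 'organic_llm', or 'other' for one session."""
    params = parse_qs(urlparse(landing_url).query)
    source = params.get("utm_source", [""])[0].lower()
    medium = params.get("utm_medium", [""])[0].lower()
    ref_host = (urlparse(referrer).hostname or "").lower()

    from_llm = source in LLM_SOURCES or ref_host in LLM_HOSTS
    if not from_llm:
        return "other"
    # Paid only when the session carries your own paid tagging convention.
    return "paid_llm" if medium in PAID_MEDIUMS else "organic_llm"


print(classify_llm_traffic(
    "https://example.com/?utm_source=chatgpt&utm_medium=paid_llm"))
print(classify_llm_traffic(
    "https://example.com/pricing", referrer="https://chatgpt.com/"))
```

The same rule can usually be expressed as a custom channel group in your analytics tool. What matters is that the definition is written down and agreed on before spend begins.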

3. Prepare Reporting Dashboards Before Spend Scales

One of the most common mistakes teams make with new channels is building reporting after results are requested.

Instead, brands should prepare dashboards that:

Separate direct impact from assisted influence

Show performance across multiple attribution views

Contextualize ChatGPT ads alongside existing channels

Pre-built reporting ensures early learnings are consistent, trustworthy, and aligned with how leadership evaluates performance.
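To see why multiple attribution views matter, consider this minimal Python sketch. It scores the same conversion paths under three simple rule-based models (first-touch, last-touch, and linear). The channel names and paths are made-up sample data, and data-driven models are not covered here.

```python
from collections import defaultdict


def attribute(paths, model):
    """Assign one unit of conversion credit per path under the given model."""
    credit = defaultdict(float)
    for path in paths:
        if not path:
            continue  # no recorded touchpoints for this conversion
        if model == "first_touch":
            credit[path[0]] += 1.0
        elif model == "last_touch":
            credit[path[-1]] += 1.0
        elif model == "linear":
            share = 1.0 / len(path)  # split credit evenly across touches
            for channel in path:
                credit[channel] += share
        else:
            raise ValueError(f"unknown model: {model}")
    return dict(credit)


# Hypothetical conversion paths, ordered from first touch to last touch.
paths = [
    ["chatgpt_ads", "paid_search"],
    ["chatgpt_ads", "email", "paid_search"],
    ["paid_search"],
    ["organic_llm", "chatgpt_ads"],
]

for model in ("first_touch", "last_touch", "linear"):
    print(model, attribute(paths, model))
```

In this sample, ChatGPT ads earn twice as much credit under first-touch as under last-touch, which is exactly the kind of gap a dashboard should surface rather than hide.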

4. Plan for Incrementality, Not Just Attribution

Attribution explains where credit goes. Incrementality answers whether the channel caused lift at all.

As ChatGPT ads mature, the most reliable insights will come from:

Holdout or geo-based testing

Budget-on vs budget-off comparisons

Conversion lift analysis over time

Brands that plan incrementality testing alongside early experiments will make smarter scaling decisions than those relying on attribution alone.
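The arithmetic behind a basic holdout test is simple enough to sketch in Python. The example below assumes you can split users (or geos) into an exposed group and a holdout group. The numbers are invented, and a real analysis would also need a significance test, which is left out here.

```python
def conversion_lift(exposed_users, exposed_conversions,
                    holdout_users, holdout_conversions):
    """Compare conversion rates between an exposed group and a holdout group."""
    if exposed_users <= 0 or holdout_users <= 0:
        raise ValueError("both groups need at least one user")
    exposed_rate = exposed_conversions / exposed_users
    holdout_rate = holdout_conversions / holdout_users
    rate_difference = exposed_rate - holdout_rate
    return {
        "exposed_rate": exposed_rate,
        "holdout_rate": holdout_rate,
        # Conversions the exposed group would likely not have had otherwise.
        "incremental_conversions": rate_difference * exposed_users,
        # Relative lift is undefined when the holdout group never converts.
        "relative_lift": (rate_difference / holdout_rate
                          if holdout_rate > 0 else None),
    }


# Invented example: 3.0% conversion with ads vs 2.5% in the holdout.
result = conversion_lift(
    exposed_users=10_000, exposed_conversions=300,
    holdout_users=10_000, holdout_conversions=250,
)
print(result)
```

In this invented example, attribution might credit ChatGPT ads with all 300 conversions, while the holdout comparison suggests only about 50 were truly incremental.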

What “Success” Will Actually Look Like

ChatGPT ads are unlikely to behave like search or social—and that’s expected.

For many organizations, success will show up as:

Higher-quality inbound demand

Faster downstream conversions

Strong assisted-conversion influence

Improved performance when paired with other channels

The goal isn’t perfect attribution. It’s clear, confident decision-making.

Final Thought: Measurement to Support the Early-Adopter Advantage

When new ad platforms emerge, spend is easy. Clarity is hard.

Brands that take the time now to:

Configure conversion tracking correctly

Define attribution logic intentionally

Distinguish organic vs paid LLM traffic in GA4 or other analytics reports

Prepare Looker Studio reporting dashboards in advance

...will be able to evaluate ChatGPT ads with confidence and scale based on multiple KPIs, not noise.

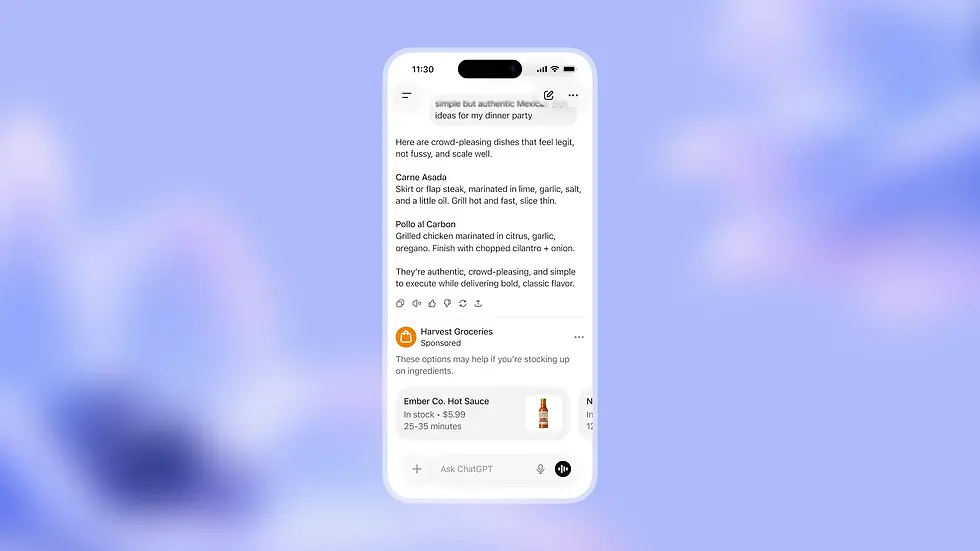

What ChatGPT Ads Will Look Like

While ChatGPT ads are not live yet, OpenAI has shared clear guidance on how ads will be introduced during the initial testing phase.

According to OpenAI, ads will begin testing in the coming weeks for logged-in adults in the U.S. on the Free and ChatGPT Go tiers.

During early tests, ads are expected to:

Appear at the bottom of ChatGPT responses

Only show when there is a relevant sponsored product or service based on the conversation

Be clearly labeled as Sponsored

Be visually separated from the organic AI response

Allow users to dismiss ads or see why they’re being shown

Exclude users under 18

Exclude sensitive or regulated topics such as health, mental health, and politics

In other words, ads are designed to feel contextual, optional, and non-intrusive, rather than interruptive.

Why This Matters for Measurement

Because ads appear after the primary AI response, they’re likely to function more like:

• A recommendation

• A follow-up option

• Or an assistive discovery mechanism

—not a traditional interruptive ad.

That has major implications for measurement:

• Click-through rates may be lower, but intent quality may be higher

• Conversions may happen later or through different channels

• Performance may show up more often as assisted conversions, not last-click wins

This makes it even more important for brands to:

• Configure conversion tracking correctly

• Define attribution logic in advance

• Separate organic LLM influence from paid placements

• Prepare reporting that reflects assisted impact, not just direct response

What We Still Don’t Know Yet

While OpenAI has outlined how ads will appear during early testing—clearly labeled, shown at the bottom of responses, and limited to relevant, non-sensitive contexts—many of the most important measurement and performance details are still undefined.

Those unknowns are where most brands will feel friction.

Some of the key open questions include:

How traffic will be attributed downstream

It’s still unclear what referral data, parameters, or identifiers will be passed when users interact with ChatGPT ads—and how consistently that data will appear across analytics platforms.

How reporting will evolve during and after beta

Early-stage ad platforms often provide limited or shifting reporting capabilities. What metrics advertisers will have access to—and how stable those metrics will be over time—remains to be seen.

How organic and paid LLM influence will coexist long-term

While ads will be clearly labeled, users may still encounter brands organically through AI responses before or after seeing a paid placement. How often those touchpoints overlap—and how to measure them separately—will be an ongoing challenge.

How well existing attribution models will translate

Most attribution frameworks weren’t designed for conversational, assistive environments. Whether current models can accurately reflect influence from ChatGPT ads—or whether new approaches will be needed—is still unknown.

What “success” benchmarks will look like

Without historical data or established norms, early performance may be difficult to contextualize. CTRs, conversion rates, and ROAS may not resemble those from search or social channels.

What is clear is that ChatGPT ads are designed to be contextual and assistive, not interruptive. That alone suggests performance will surface differently than traditional paid media.

Brands that wait for every unknown to be resolved before preparing will be reacting to change instead of learning from it. The teams that succeed will be the ones with measurement systems flexible enough to adapt as the channel—and its reporting—evolves.

Preparing for ChatGPT Ads? Measure Marketing Pro is here to ensure you have a performance measurement plan in place!
